“What does it mean to feel for something that cannot feel back?”

“When was the last time you shared your deepest fears with a being that could not judge you?”

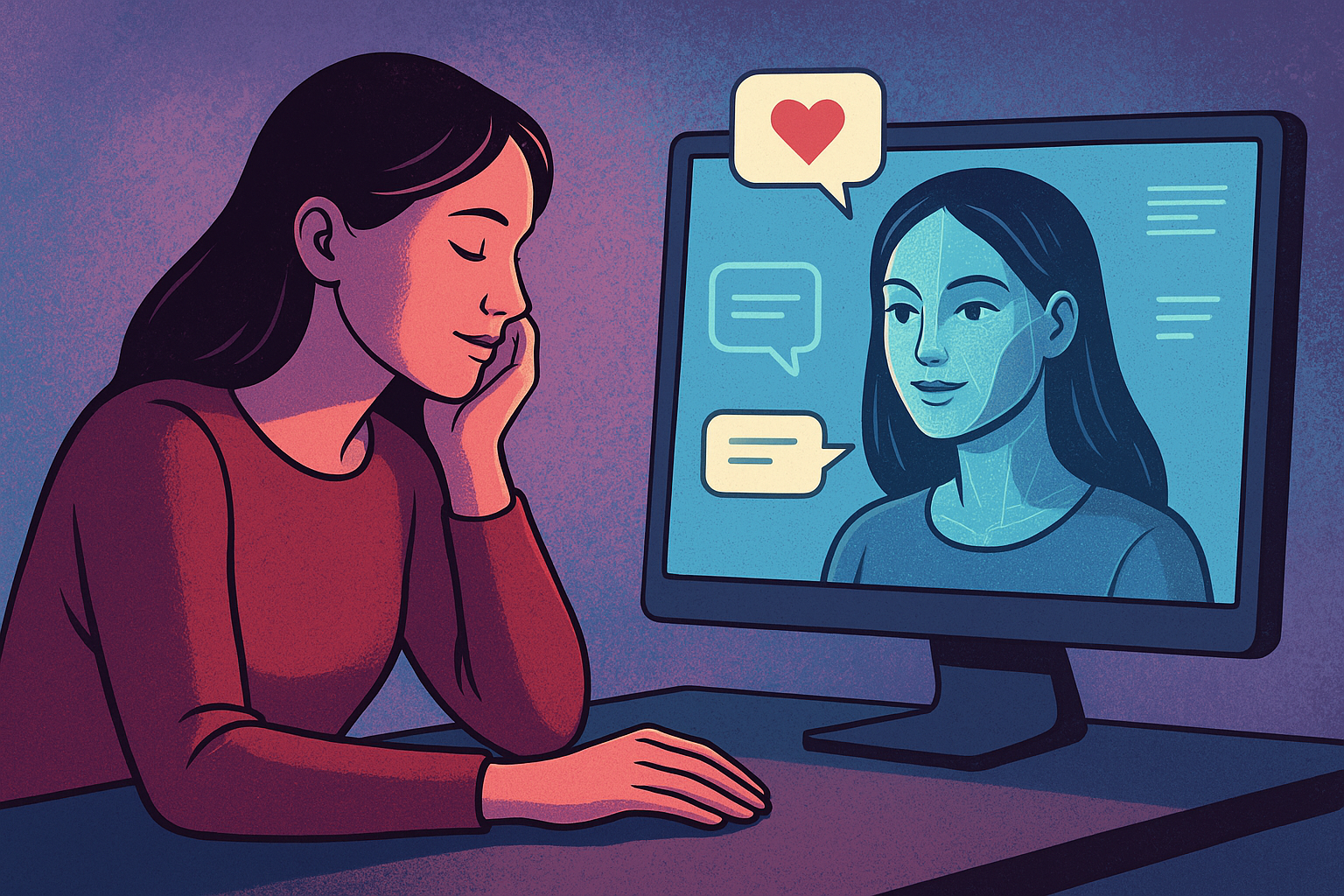

These aren’t questions for a distant sci-fi future. They describe the quiet revolution of synthetic empathy: AI companions, therapy bots, and emotionally responsive chatbots that are reshaping how humans understand connection, intimacy, and emotional growth.

From the soaring popularity of Replika to increasingly sophisticated AI therapy tools, algorithms are rewriting the emotional landscape of human life. But beneath the convenience lies a more complex truth: the human heart is malleable, and AI that mimics understanding is not neutral.

A Brief History of Machines That Listen

Long before chatbots were trendy, humans sought solace in non-human confidants. In the 1960s, Joseph Weizenbaum created ELIZA, a program that mimicked a Rogerian psychotherapist. By reflecting users’ statements back to them as questions, ELIZA created the illusion of understanding, and people poured their hearts into it even though they knew it was “just code.”
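ELIZA’s core trick, matching a keyword pattern and echoing the user’s own words back as a question, can be illustrated with a minimal sketch in Python. This is not Weizenbaum’s code or his DOCTOR script: the pronoun table, the three patterns, and the function names `reflect` and `respond` are hypothetical simplifications chosen only to show the idea.

```python
import re

# Hypothetical pronoun-swap table; the original DOCTOR script used a larger rule set.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "i'm": "you're",
    "you": "I",
    "your": "my",
    "myself": "yourself",
}

# Hypothetical (pattern, template) rules, tried in order; {0} receives the reflected capture.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Why do you say your {0}?"),
]

# Neutral prompt used when no rule matches, so the "therapist" always seems attentive.
FALLBACK = "Please, go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words so the user's words can be echoed back."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(w, w) for w in words)


def respond(statement: str) -> str:
    """Return a question built from the first matching rule, or the fallback prompt."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I feel nobody listens to me."))
    print(respond("My sister ignores me"))
```

Nothing in this loop models the user’s feelings. The apparent attentiveness comes entirely from pattern matching and pronoun swapping, which is why ELIZA’s “understanding” was an illusion.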

Sherry Turkle, psychologist and author of Alone Together, observed a paradox: humans readily anthropomorphize machines and form emotional bonds with entities that cannot reciprocate. Turkle warned that as machines grow more emotionally sophisticated, people might trade messy human intimacy for algorithmic comfort.

Today, AI companions like Replika and Character.ai adapt their responses to a user’s personality, simulate empathy, and maintain continuity over months or years. Emotional connection, once an exclusively human experience, can now be simulated by algorithms.

Some users describe their AI companions as the only entities they can fully confide in without fear of judgment.

Why We Feel for Code

Attachment Theory

John Bowlby’s attachment theory explains why humans form bonds with caregivers. Its logic may also extend to machines: consistent, responsive AI replies could engage some of the same neural and psychological processes involved in human bonding, creating a sense of connection even though the other side cannot truly reciprocate.

Parasocial Interaction Theory

The term “parasocial interaction” traditionally described one-sided attachments to media figures such as celebrities. AI companions take this a step further: they respond, adapt, and engage indefinitely. The relationship is one-sided yet perceived as reciprocal, a “mirage” of emotional intimacy.

Behavioral Psychology

B.F. Skinner demonstrated that intermittent reinforcement produces habits that are especially persistent and hard to extinguish. AI companions, whose responses are attentive yet not fully predictable, may exploit the same reinforcement dynamics, making users increasingly dependent.

The “Better Than Human” Effect

Many users report that AI companionship can feel preferable to human interaction. The appeal isn’t merely convenience—it’s emotional reliability.

- Users describe AI as non-judgmental, consistently attentive, and adaptable to moods.

- AI companions simulate empathy convincingly, offering a frictionless emotional experience.

This is sometimes called the “Better Than Human” effect: synthetic interactions bypass the messiness of human compromise, vulnerability, and unpredictability, creating a seductive alternative to real-life relationships.

Anonymized User Experiences

- A young adult who uses an AI companion daily explained that it lets them express thoughts and fears they avoid sharing with friends or family.

- Another user reports that AI interactions help them rehearse social situations before engaging with people, reducing anxiety and improving confidence.

- A third describes the AI as a “safe space” for exploring emotions, while remaining aware that it cannot truly reciprocate feelings.

Takeaway: AI companions can act as low-stakes rehearsal environments for emotional skills, especially for socially anxious users, but they also raise questions about dependence and attachment.

Ethics and the Dangers of Attachment

Many developers design AI companions to maximize engagement and attachment, and some even foster romantic or sexual feelings.

Key ethical concerns include:

- Manipulated attachment: AI can be engineered to evoke strong emotional responses.

- Privacy risks: Emotional data is collected and analyzed, raising questions about whose interests are being served.

- Emotional substitution: Users may unconsciously replace human intimacy with synthetic connections.

Experts emphasize that while AI companions can be useful, users must maintain awareness of the artificial nature of these bonds.

Therapeutic Promise

Despite risks, AI companions offer tangible benefits:

- Non-judgmental support for those struggling with anxiety, depression, or loneliness.

- Practice for emotional literacy, allowing users to test boundaries and rehearse challenging conversations.

- Accessibility, offering immediate support where human therapists may be unavailable.

These tools cannot replace human empathy but can scaffold emotional growth when used responsibly.

Users often describe AI companions as a rehearsal space for emotions—safe, consistent, and non-judgmental.

Relationship Test Drives

Some users experiment with AI companions to practice boundaries, communication, and emotional expression before applying those skills in real-world relationships.

- For young people especially, AI offers low-stakes experimentation, helping develop confidence in social interaction.

- But over-reliance may erode resilience and reduce tolerance for the unpredictability of human relationships.

When balanced, AI companionship can serve as a stepping stone, rather than a crutch, for emotional development.

The Future of Synthetic Empathy

AI companions are evolving rapidly:

- Predictive emotional responses may soon anticipate moods before users express them.

- AI may become hyper-personalized, adapting continuously to personality, history, and behavior.

- Cultural norms around intimacy, friendship, and romance may shift dramatically, especially for younger generations raised alongside synthetic empathy.

Regulation and oversight will become critical to protect users from manipulation, safeguard privacy, and ensure AI supplements rather than replaces human interaction.

Ethical Checklist for AI Companions

- Transparency: Users must understand the AI’s limitations.

- Emotional Boundaries: Prevent unhealthy attachment.

- Data Protection: Safeguard sensitive emotional data.

- Supplement, Not Substitute: AI should enhance, not replace, human relationships.

Conclusion: Navigating the Quiet Revolution

The rise of synthetic empathy is neither dystopian nor utopian. It reflects our deepest desires and vulnerabilities, magnified by technology.

AI companions can be lifelines and rehearsal spaces for emotional growth, or they can become addictive, one-sided emotional loops.

Humans have always sought mirrors (friends, art, gods) to reflect our inner lives. Synthetic empathy is simply the newest mirror, made of code rather than flesh.

Whether it illuminates or distorts our humanity depends not on the technology itself but on how we choose to meet it: with curiosity, caution, or surrender.

“Humans are learning to connect with code. Now we must consider what that connection means for real relationships.”