- Can a machine act intelligently? Can it solve all the problems that any human being would solve by thinking?

- Are human intelligence and artificial intelligence the same? Can we think of the human brain as a computer?

- Can a machine have a mind, mental states, and consciousness in the same sense that a human being does?

- Can a machine be aware of itself as an object of thinking?

These are all questions that researchers, scientists, and philosophers of artificial intelligence have asked themselves over the years, and their answers reflect divergent positions. Over time, tests, thought experiments, and theories have emerged to help us define the concepts of intelligence, consciousness, and the machine.

- The Turing Test: If a machine behaves as intelligently as a human, then it is as intelligent as a human.

- Dartmouth’s Proposal: Every feature of intelligence or aspect of learning can be described with such precision that a machine can be built to simulate it.

- Newell and Simon’s Physical Symbol System Hypothesis: A physical symbol system has the necessary and sufficient means for general intelligent action.

- Searle’s Strong AI Hypothesis: A properly programmed computer, with adequate inputs and outputs, has a mind in exactly the same sense that a human being has a mind.

- Hobbes’s Mechanism: Reason is nothing but reckoning (that is, adding and subtracting) of the consequences of general names agreed upon for marking and signifying our thoughts.

Turing Test

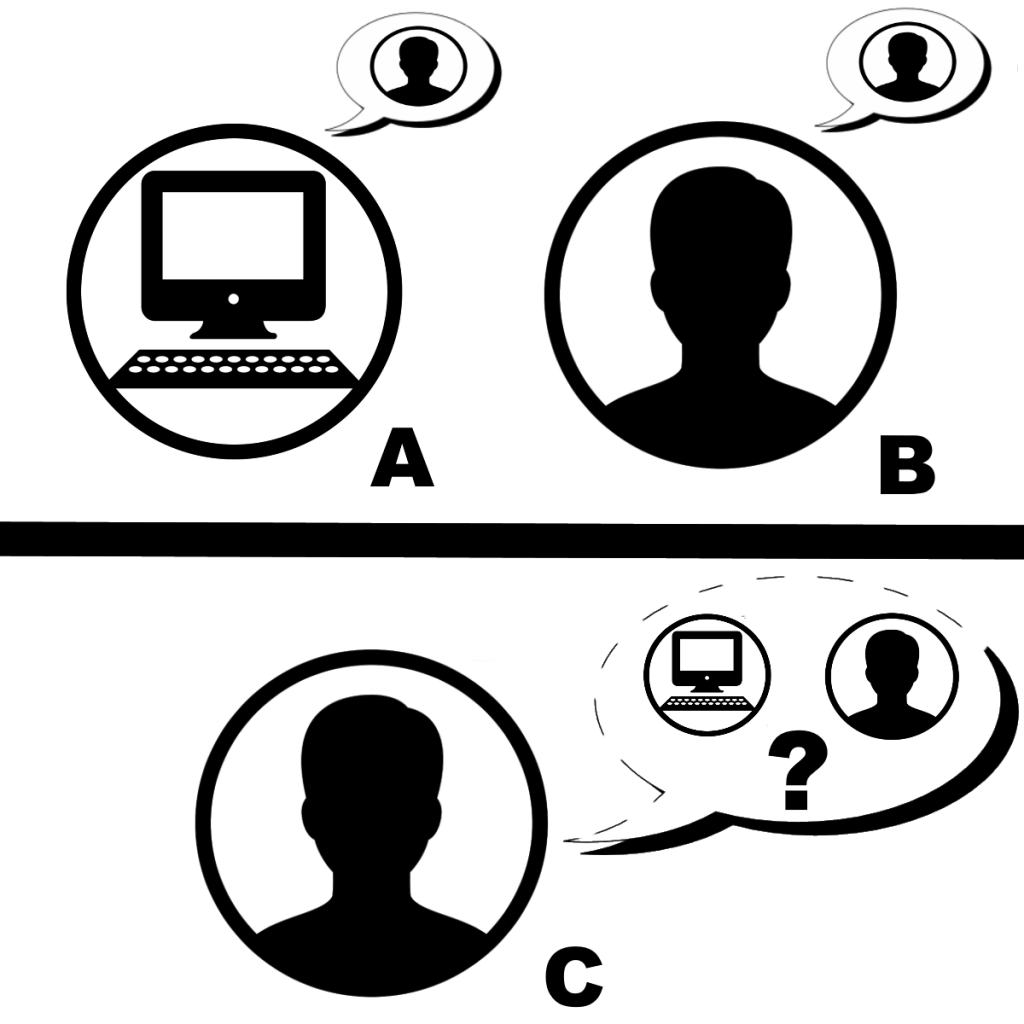

Alan Turing, in his seminal 1950 paper Computing Machinery and Intelligence, reduces the problem of defining intelligence to a simple question of dialogue. He suggests that if a machine can answer any question put to it in the words an ordinary person would use, then it can be called an intelligent machine. A modern version of his test would use a chat room in which one participant is a computer program (A), another is a real person (B), and a third is the interrogator (C). The machine passes the test if the interrogator cannot tell which of the two participants is the human being.
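The chat-room version of the test can be sketched in a few lines of Python. This is only an illustrative toy, not Turing's own formulation: the function names (`run_imitation_game`, `fooled_rate`, `spot_the_beep`) and the canned participants are hypothetical, and a real interrogator would of course ask follow-up questions rather than read a fixed transcript.

```python
import random

def run_imitation_game(machine, human, guess_machine, questions, rng):
    """Play one round; return True if the interrogator fails to pick out the machine."""
    # Hide the two participants behind the anonymous labels "X" and "Y".
    labels = ["X", "Y"]
    rng.shuffle(labels)
    machine_label, human_label = labels
    hidden = {machine_label: machine, human_label: human}
    # Build the transcript in a fixed label order so ordering leaks nothing.
    transcript = {
        label: [(q, hidden[label](q)) for q in questions]
        for label in ("X", "Y")
    }
    # The interrogator (C) sees only the transcript and names the machine.
    return guess_machine(transcript) != machine_label

def fooled_rate(machine, human, guess_machine, questions, rounds=1000, seed=0):
    """Fraction of rounds in which the interrogator misidentifies the machine."""
    rng = random.Random(seed)
    fooled = sum(
        run_imitation_game(machine, human, guess_machine, questions, rng)
        for _ in range(rounds)
    )
    return fooled / rounds

# Hypothetical participants: each maps a question to a typed reply.
def human(question):
    return "I would rather not say."

def clumsy_machine(question):
    return "beep"

def mimic_machine(question):
    return "I would rather not say."

def spot_the_beep(transcript):
    """A naive interrogator: accuse whoever says 'beep', otherwise guess 'X'."""
    for label, exchanges in transcript.items():
        if any("beep" in reply for _, reply in exchanges):
            return label
    return "X"
```

In this toy setup the clumsy machine is caught in every round, while the mimic is indistinguishable from the human, so the interrogator is reduced to guessing and is wrong about half the time. That is the sense in which a machine "passes".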

Turing argues that if a machine behaves as intelligently as a human being, then it is as intelligent as a human being. One objection is that the test measures only resemblance to humans: if the goal is to create machines that are smarter than humans, why insist that machines should be like people? In this regard, Stuart Russell and Peter Norvig, in Artificial Intelligence: A Modern Approach, a standard text in AI translated into 13 languages and used in over 1300 universities in 116 countries, state that: “Aeronautical engineering texts do not define the goal of their field as making machines that fly so exactly like pigeons that they can fool even other pigeons.”

Dartmouth Conference

The Dartmouth conference refers to the Dartmouth Summer Research Project on Artificial Intelligence, held in 1956, and regarded as the official event that marks the birth of this research field.

The event was proposed in 1955 by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon in a 17-page informal document known as the “Dartmouth proposal”. The document introduced the term artificial intelligence for the first time and justified the need for the conference with the following assertion:

“The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

Dartmouth Proposal, p. 1.

The proposal then discusses what the organizers consider to be the main themes of the research field, including neural networks, computability theory, creativity, and natural language processing.

Is it possible to create a machine that can solve all the problems that humans solve using their intelligence? The question defines the scope of what machines will be able to do in the future and sets the direction of AI research. Whether a machine thinks in the same way a person thinks is not relevant to this question.

Not everyone agrees that such a machine is possible. Arguments against this basic premise must show that building a working AI system is impossible, either because there is a practical limit to the capabilities of computers or because there are specific qualities of the human mind that cannot be duplicated by a machine or by the methods of current AI research. Arguments in favor of the premise must show that such a system is feasible. In both cases, the first step in answering the question is to give a clear definition of intelligence.

Newell and Simon’s Hypothesis

In 1963, Allen Newell and Herbert A. Simon proposed that the manipulation of symbols was the essence of both human and artificial intelligence. They wrote that “a physical symbol system has the necessary and sufficient means for general intelligent action. This is a strong hypothesis. It means that any intelligent agent is necessarily a physical symbol system.” The claim cuts both ways: human thought must be a kind of symbol manipulation, since a symbol system is necessary for intelligence, and machines can be intelligent, since a symbol system is sufficient for intelligence.
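To make “manipulation of symbols” concrete, here is a minimal sketch of a rule-following procedure that derives new symbols from old ones without ever consulting their meaning. It is an illustrative toy under our own assumptions, not Newell and Simon’s formalism; the name `forward_chain` and the example rules are hypothetical.

```python
def forward_chain(facts, rules):
    """Derive every symbol reachable from `facts` by applying `rules`.

    Each rule is a pair (premises, conclusion). When all premise symbols
    are known, the conclusion symbol is added. Symbols are opaque strings:
    the procedure only compares them, it never interprets them.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known

# Hypothetical rule base, written purely as strings.
RULES = [
    (("human(socrates)",), "mortal(socrates)"),
    (("mortal(socrates)", "greek(socrates)"), "mortal_greek(socrates)"),
]

derived = forward_chain({"human(socrates)", "greek(socrates)"}, RULES)
```

The procedure reaches `mortal_greek(socrates)` by pattern matching alone. Whether that kind of process is all there is to intelligence is exactly what the hypothesis asserts and what its critics dispute.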

Hubert Dreyfus argued that human intelligence and expertise depend primarily on unconscious instincts rather than on conscious symbolic manipulation, and that these unconscious abilities could never be captured in formal rules.

Turing anticipated Dreyfus’s argument in his 1950 paper. He stated the objection as “it is not possible to produce a set of rules purporting to describe what a man should do in every conceivable set of circumstances”, and replied that “we cannot so easily convince ourselves of the absence of complete laws of behavior as of complete rules of conduct.” The only way to find such laws, he noted, is by scientific observation.

Searle’s Hypothesis

John Rogers Searle, an American philosopher widely noted for his contributions to the philosophy of language, philosophy of mind, and social philosophy, defined the philosophical position he called strong AI, which can be framed as a question: Can a physical symbol system have a mind and mental states? In Searle’s description of the position, “The computer is not merely a tool in the study of the mind, rather the appropriately programmed computer really is a mind in the sense that computers given the right programs can be literally said to understand and have other cognitive states.” The definition depends on the distinction between simulating a mind and actually having a mind.

Searle distinguished this position from what he called weak AI, which asks: Can a physical symbol system act intelligently? Having defined the terms, he argued that even if we had a computer program that acted exactly like the human mind, there would still be a difficult philosophical question to answer: Can machines think? Or, in other words: Can machines be conscious?

Searle then stated that “consciousness is an intrinsically biological phenomenon, it is impossible in principle to build a computer (or any other nonbiological machine) that is conscious,” a thesis that directly contradicts the possibility of strong AI. In the Chinese room argument, he argued that a digital computer executing a program cannot have a “mind”, “understanding”, or “consciousness”, regardless of how intelligent or human-like the program makes the computer’s behavior. The argument was presented in the paper “Minds, Brains, and Programs”, published in Behavioral and Brain Sciences in 1980.

Consciousness

Some people believe that consciousness is an essential element of intelligence, although their definition of consciousness is often very close to their definition of intelligence. To answer the question of whether machines can be conscious, one must first define the concepts of mind, mental states, and consciousness.

For philosophers and neuroscientists, these words refer to the familiar, everyday experience of having a thought in one’s head, such as a perception, a dream, an intention, or a plan, and to the way we say something or understand something. Explaining how such experience arises is what philosophers call the hard problem of consciousness, today’s version of a classic philosophical problem, the mind-body problem. Related to it is the problem of meaning or understanding: what is the connection between our thoughts and what we are thinking about? A final problem is that of experience: Do two people who see the same thing have the same experience? Are there things in their heads that may differ from one person to another?

But all this does not detract from the big philosophical question: Can a computer program, merely by combining the binary digits 0 and 1, duplicate the ability of neurons to give rise to mental states such as cognition or perception, and ultimately reproduce the experience of consciousness?

In the philosophy of mind, the computational theory of mind (CTM), also known as computationalism, holds that the human mind is an information processing system and that cognition and consciousness together are a form of computation. On this view, the relationship between mind and brain is similar, if not identical, to the relationship between a running program and a computer. The idea has philosophical roots in Leibniz, Hume, and Kant.

One of the earliest proponents of the computational theory of mind was Thomas Hobbes, who said, “by reasoning, I understand computation. And to compute is to collect the sum of many things added together at the same time, or to know the remainder when one thing has been taken from another. To reason, therefore, is the same as to add or to subtract.”

The latest version of the theory was developed by the philosophers Hilary Putnam (in 1967) and Jerry Fodor (in the 1960s, 1970s, and 1980s). It bears directly on the earlier questions: if the human brain is a type of computer, then computers can be both intelligent and conscious, which would answer both the practical and the philosophical questions of AI. The practical question remains: Can a machine exhibit complete, general intelligence in the broadest sense of the term?

Roger Penrose, in “Mathematical Intelligence” (1994), proposed the idea that the human mind does not use a knowably sound calculation procedure to understand and discover mathematical intricacies. This would imply that a normal Turing-complete computer would not be able to ascertain certain mathematical truths that human minds can, suggesting either that a human-like mind and human-like consciousness are not possible in such a machine, or that the functioning of the human mind is not yet fully understood.

Turing notes that many objections take the form “a machine will never be able to do X”, where X can be many things: “Be kind, resourceful, beautiful, friendly, have initiative, have a sense of humor, tell right from wrong, make mistakes, fall in love, enjoy strawberries and cream, make someone fall in love with it, learn from experience, use words properly, be the subject of its own thought, have as much diversity of behavior as a man, do something really new.” He argues that such objections are often based on naive assumptions and are disguised forms of the argument from consciousness.

If emotions are defined solely by their effect on behavior or by how they function within an organism, then they can be thought of as a mechanism that an intelligent agent uses to maximize the utility of its actions. On this view, fear generates urgency, and empathy is an essential component of positive human-machine interaction. Emotions, however, can also be defined by their subjective quality, by what it feels like to have them.

The question of whether a machine really feels an emotion, or merely acts as if it feels one, brings us back to the philosophical question: Can a machine be self-aware? Turing condenses all the other properties of human beings into the question: Can a machine be the subject of its own thought? Can it think about itself? A program that can report on its internal states and processes, in the simple sense of a debugger program, can certainly be written. Turing asserts that “a machine can undoubtedly be its own subject matter.”
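A program that reports on its own internal state, in this limited debugger-like sense, is easy to sketch. The class below is a hypothetical illustration (the name `SelfReportingCounter` and its fields are our own), and it shows only self-description, which is a much weaker property than self-awareness.

```python
import inspect

class SelfReportingCounter:
    """A tiny program that can describe its own state and operations."""

    def __init__(self):
        self.count = 0
        self.history = []

    def step(self, amount):
        """Change internal state and remember how it was changed."""
        self.count += amount
        self.history.append(amount)

    def report(self):
        """Return a description of this object's current state and public methods."""
        methods = [
            name
            for name, _ in inspect.getmembers(self, predicate=inspect.ismethod)
            if not name.startswith("_")
        ]
        return {
            "class": type(self).__name__,
            "state": dict(vars(self)),  # snapshot of the instance attributes
            "methods": methods,
        }

counter = SelfReportingCounter()
counter.step(2)
counter.step(3)
summary = counter.report()
```

Here `summary` lists the counter’s class name, its current `count` and `history`, and the operations it supports. The program is “its own subject matter” in the narrow sense, while the question of whether anything is experienced is left untouched.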

To the question “Can a machine take me by surprise?”, Turing answers that it can. He notes that, with sufficient memory capacity, a computer can behave in an astronomical number of different ways.
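The scale involved is easy to check with a little arithmetic: a memory of n bits can be in 2^n distinct configurations. The sketch below is our own illustration rather than a calculation from Turing’s paper, and the choice of a single kilobyte of memory is arbitrary.

```python
def count_configurations(bits: int) -> int:
    """Number of distinct states a memory of `bits` binary cells can hold."""
    return 2 ** bits

def decimal_digits(n: int) -> int:
    """Length of a positive integer when written in base 10."""
    return len(str(n))

# Assumption for illustration: a memory of one kilobyte (8 * 1024 bits).
KILOBYTE_BITS = 8 * 1024
digits_for_one_kilobyte = decimal_digits(count_configurations(KILOBYTE_BITS))
```

Even for one kilobyte, the count of possible configurations is a number with more than two thousand decimal digits, far beyond anything a designer could enumerate or anticipate case by case.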

Conclusion

In conclusion, now that artificial intelligence is evolving faster and faster, it is worth answering, once and for all, the classic philosophical and existential questions, because the answers are fundamental to establishing which directions development will take, how our relationship with artificial intelligence will evolve, and what the rights and duties of a machine might be. It is imperative to respond to those questions now and create a unified framework of understanding. Later, in the classic game of chasing after events that the human species likes so much, it will be too late: “Oh dear, we have a self-aware machine. What do we do now?”